Monitoring brand visibility used to be straightforward. You opened Google Search Console, checked impressions, clicks, branded queries, and landing pages, and you had a clear picture of how people found your company.

That model is no longer sufficient.

People’s behaviour has changed. For the better part of the last 25 years, search meant a single engine dominated by Google. It is now a multi-engine ecosystem that includes ChatGPT, Perplexity, Gemini, and other LLMs whose role can’t be ignored.

Users now get answers from systems that combine retrieval with model-generated text, update outputs frequently, and do not follow ranking logic. On a daily basis, users search/prompt:

1. Google: ~14 billion times

2. ChatGPT: ~2.5 billion times

This expansion has made tracking organic visibility much harder – not just because there are more engines, but because LLMs operate fundamentally differently from Google.

This article focuses on how to track your brand across these AI systems, what data actually matters, and which new AI SEO KPIs companies should adopt to measure visibility in an environment where Google metrics alone no longer provide the full picture.

AI Search is not Google, which makes tracking AI visibility much more complex.

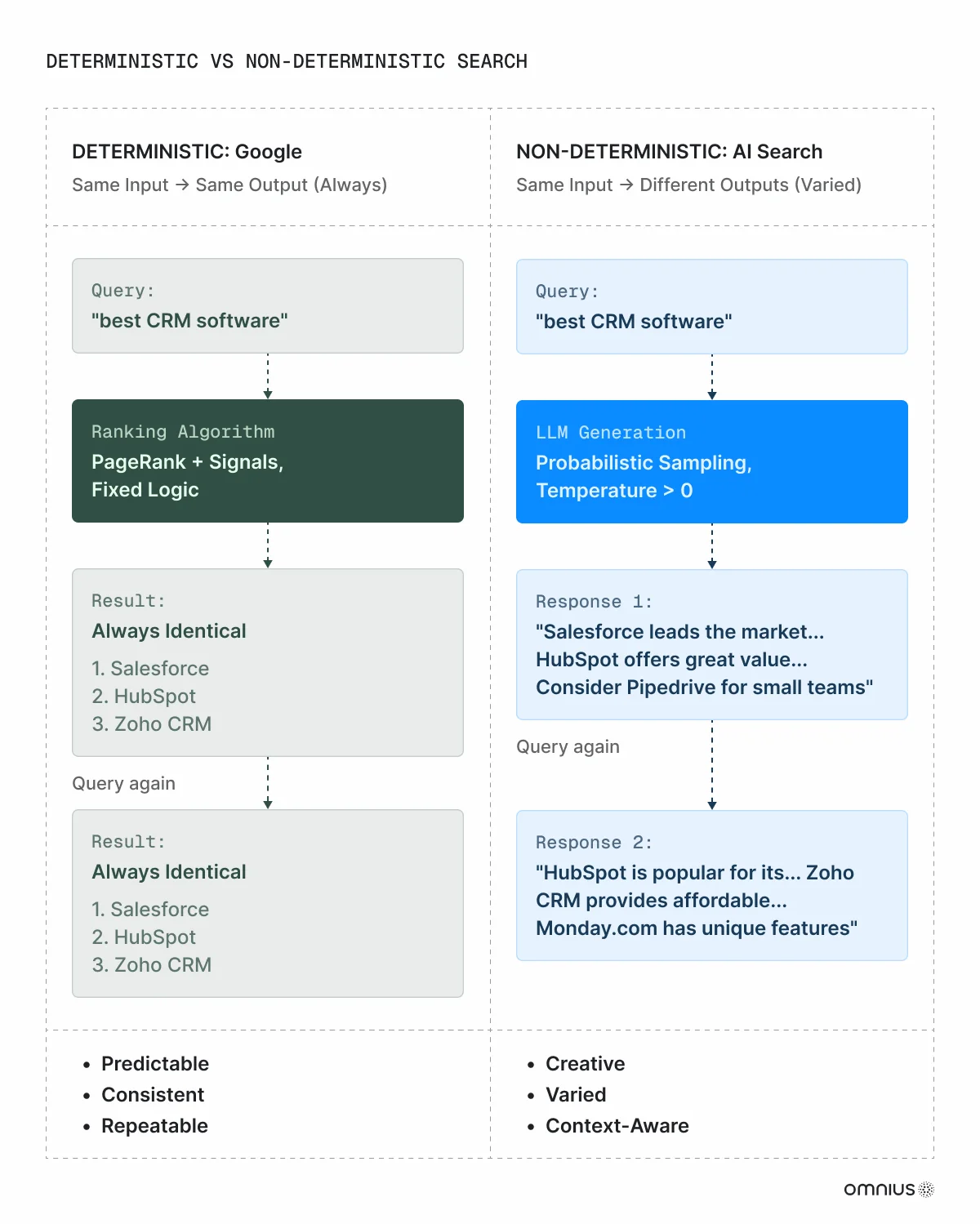

Google’s search results are largely deterministic: two users searching the same query will usually see the same ranked links, with only minor variations based on location or personalization.

AI search is non-deterministic. Responses are generated probabilistically, influenced by

- Prompt phrasing,

- Semantic nuance,

- Chat history,

- Model version,

- Retrieval mode,

- Temperature settings, and

- The user’s context.

The same question asked twice can produce different outputs.

Because of this, a single snapshot tells you almost nothing about your actual visibility. Tracking requires repeated runs, averaged outputs, prompt clustering, and comparisons across engines.
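The repeated-run idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how any particular tracking tool works: the brand name `Acme`, the cluster labels, and the sample responses are all hypothetical, and in practice the response texts would come from whichever engine you are sampling.

```python
import re
from collections import defaultdict


def mention_rate(responses, brand):
    """Fraction of sampled responses that mention the brand at least once."""
    if not responses:
        return 0.0
    # Whole-word, case-insensitive match, so "Acme" does not match "Acmeology".
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


def rate_by_cluster(samples, brand):
    """Mention rate per prompt cluster.

    `samples` is a list of (cluster_label, response_text) pairs collected
    from repeated runs of the prompts in each cluster.
    """
    grouped = defaultdict(list)
    for cluster, text in samples:
        grouped[cluster].append(text)
    return {cluster: mention_rate(texts, brand) for cluster, texts in grouped.items()}


# Hypothetical responses from repeated runs (illustrative data only).
samples = [
    ("best crm tools", "Popular options include Acme, Foo, and Bar."),
    ("best crm tools", "Foo and Bar are the most common picks."),
    ("best crm tools", "Many teams choose ACME for its reporting."),
    ("best crm tools", "Consider Bar if you need a free tier."),
    ("crm pricing", "Acme starts with a free plan."),
    ("crm pricing", "Acme and Foo both offer monthly billing."),
]

rates = rate_by_cluster(samples, "Acme")
for cluster, rate in rates.items():
    print(f"{cluster}: mentioned in {rate:.0%} of runs")
```

Any single run in the first cluster would report the brand as either present or absent. Only the aggregate across runs shows that it appears about half the time.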

And this complexity scales fast.

Instead of one dominant engine, we now have multiple independent systems (ChatGPT, Gemini, Perplexity, Copilot, Claude, and region-specific LLMs), each with its own training data, retrieval logic, recency window, and citation behavior.

Visibility in one engine does not imply visibility in another.

The result is a measurement environment that behaves more like observational analytics than rank tracking. To understand how your brand appears across these systems, you need multi-run sampling, multi-model comparisons, domain-level citation mapping, and platform-level reporting.
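As a rough sketch of what domain-level citation mapping with multi-model comparison could look like, the snippet below counts cited domains per engine and finds the domains that every engine cites. The engine names used as keys, the URLs, and the function names are assumptions made for illustration. How you collect the cited URLs from each engine is outside the scope of this example.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url):
    """Return the lowercased host of a URL, without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def citation_map(citations_by_engine):
    """Count how often each domain is cited, separately for each engine."""
    return {
        engine: Counter(domain_of(url) for url in urls)
        for engine, urls in citations_by_engine.items()
    }


# Hypothetical citations gathered from sampled answers (illustrative only).
citations = {
    "chatgpt": [
        "https://www.example.com/pricing",
        "https://example.com/docs",
        "https://reviews.example.org/acme",
    ],
    "perplexity": [
        "https://reviews.example.org/acme",
        "https://news.example.net/story",
    ],
}

by_engine = citation_map(citations)

# Domains cited by every engine in the sample.
shared = set.intersection(*(set(counts) for counts in by_engine.values()))

for engine, counts in by_engine.items():
    print(engine, counts.most_common(3))
print("cited by all engines:", sorted(shared))
```

In this toy sample, `example.com` is cited twice by one engine and never by the other, which is the kind of gap that a single-engine report would hide.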

Traditional SEO workflows only cover a small fraction of what’s happening.

AI search introduces variability, personalization, volatility, and prompt dependency – factors that make tracking significantly more complex than anything in the Google-only era.

Why should you be tracking AI search?

There is no need to debate whether AI search is growing. As mentioned earlier, ChatGPT alone processes roughly 2.5 billion prompts per day, and that is before counting Perplexity, Gemini, Copilot, Claude, or geo-specific models.

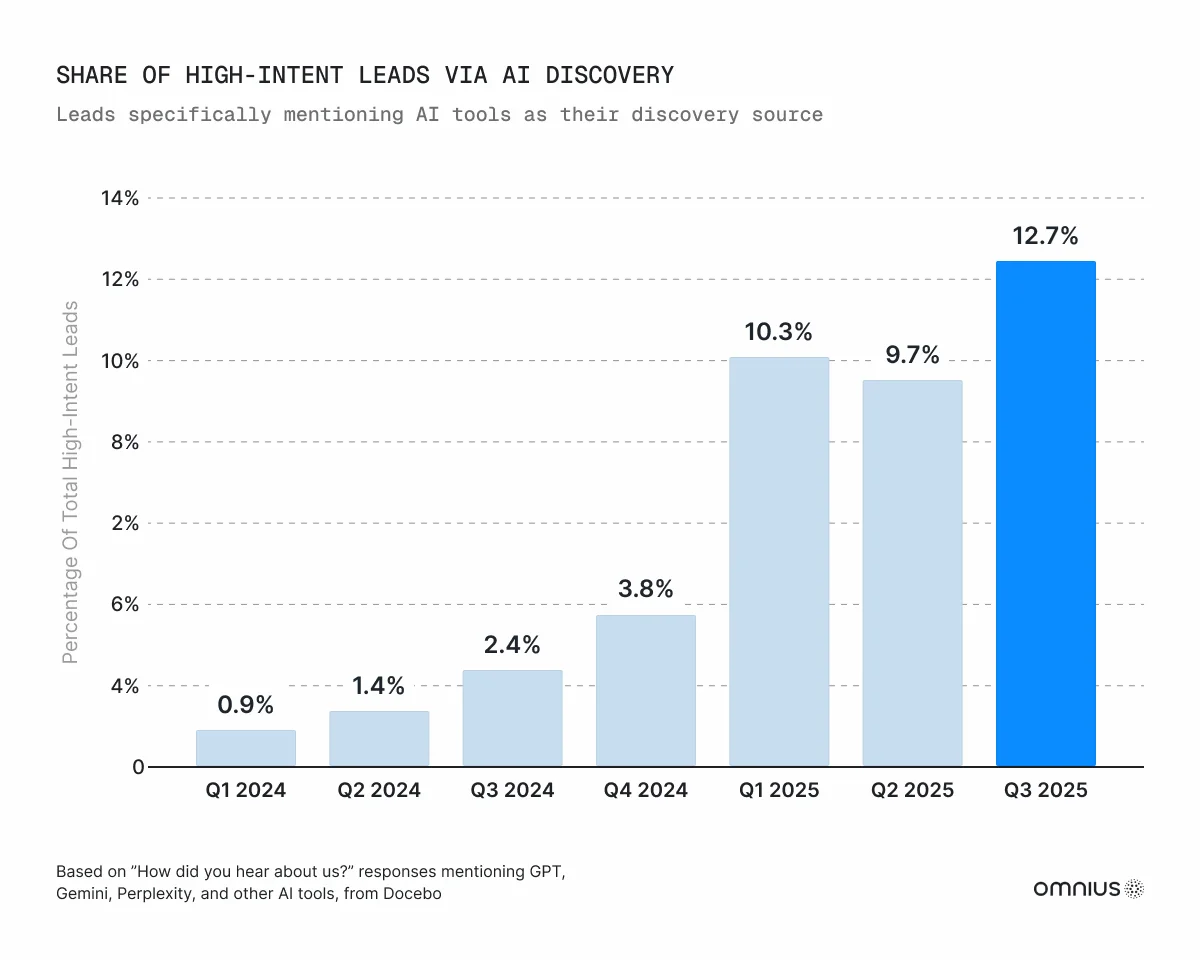

A measurable share of websites (63%) now receives traffic from AI search, and user adoption is growing rapidly (~527% YoY). People coming from LLMs are also typically more informed and further down the funnel, which leads to higher conversion rates and stronger intent signals.

AI search is not replacing Google, but it is becoming an unavoidable part of how people evaluate information, compare tools, and make purchase decisions. It is no longer experimental.

And the AI search opportunity couldn’t be more obvious.

For companies, this creates a simple operational truth: you cannot improve what you cannot see. The only way to understand how your brand is represented across AI engines is to track them directly.

Why is tracking only Google no longer sufficient?

The problem is that most organisations still rely exclusively on Google Search Console and GA4 to understand visibility. This creates an incomplete picture.

Google’s data reflects deterministic ranking results, while AI engines operate on non-deterministic logic.

Outputs vary based on model version, recency window, retrieval depth, user context, conversation history, and prompt phrasing. Because of this, AI visibility requires more nuanced and more frequent measurement than Google provides.

There is no one-to-one correlation between success on Google and success in AI engines.

Multiple independent studies confirm this. For example, Ahrefs found that, on average, only 12% of links cited by assistants like ChatGPT, Gemini, and Copilot appear in Google’s top 10 for the same query, and that 28% of ChatGPT’s most-cited pages have zero organic visibility in Google.
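You can run a similar check on your own prompts by comparing the URLs an assistant cites with Google’s top 10 results for the same query. The sketch below is a simplified assumption of how such an overlap could be computed, not the methodology used in the study cited above. The URL normalization is deliberately crude, and the sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def normalize(url):
    """Reduce a URL to host + path so trivial variants compare as equal."""
    parts = urlparse(url)
    host = parts.hostname or ""
    if host.startswith("www."):
        host = host[4:]
    # Ignore scheme, query string, fragment, and trailing slashes.
    return host + parts.path.rstrip("/")


def top10_overlap(cited_urls, google_results):
    """Share of distinct cited pages that also appear in Google's top 10."""
    cited = {normalize(url) for url in cited_urls}
    if not cited:
        return 0.0
    ranked = {normalize(url) for url in google_results[:10]}
    return len(cited & ranked) / len(cited)


# Hypothetical data for one query (illustrative only).
cited_by_assistant = [
    "https://example.com/guide/",
    "https://reviews.example.org/acme",
    "https://news.example.net/story",
    "https://example.com/pricing",
]
google_top10 = [
    "https://www.example.com/guide",
    "https://blog.example.io/post",
]

overlap = top10_overlap(cited_by_assistant, google_top10)
print(f"{overlap:.0%} of cited pages rank in Google's top 10")
```

Averaging this figure over many queries and repeated runs gives a more stable picture than any single comparison.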

Many companies that rank well in Google appear inconsistently or inaccurately inside LLM answers for reasons that traditional analytics cannot detect – outdated documentation, weak third-party authority, poor citation coverage, missing comparison content, or simply because the model’s recency filters exclude older materials.

This is why Google-only tracking is no longer sufficient. It cannot measure:

- Non-deterministic answer variability

- Model-to-model differences

- Citation patterns across engines

- Recency filters that suppress older brand content

- Prompt-dependent shifts in brand representation

Tracking AI search visibility requires a more complex approach: repeated runs, averaged outputs, prompt clustering, multi-engine comparisons, domain-level citation mapping, and platform-level reporting.

These elements cannot be replicated with Google Search Console, GA4, or standard BI dashboards.

To get an objective view of how your brand actually appears across AI systems, Google metrics must be supplemented with AI-specific tracking tools that reflect how modern engines work. Without this layer, organisations operate with incomplete visibility and risk making decisions based on misleading or outdated information.

How AI Visibility tracking works

Before we start, we need to understand how AI visibility tracking works behind the scenes. To measure brand visibility inside ChatGPT, Gemini, Perplexity, Copilot, and other engines, tools rely on two core data sources:

- Evidence-based user behavior

- Synthetic model output sampling

Everything we see in dashboards, reports, or visibility scores is a product of how these two layers are collected, processed, and combined.